This week we spotlight the nineteenth framework of risks from AI included in the AI Risk Repository:

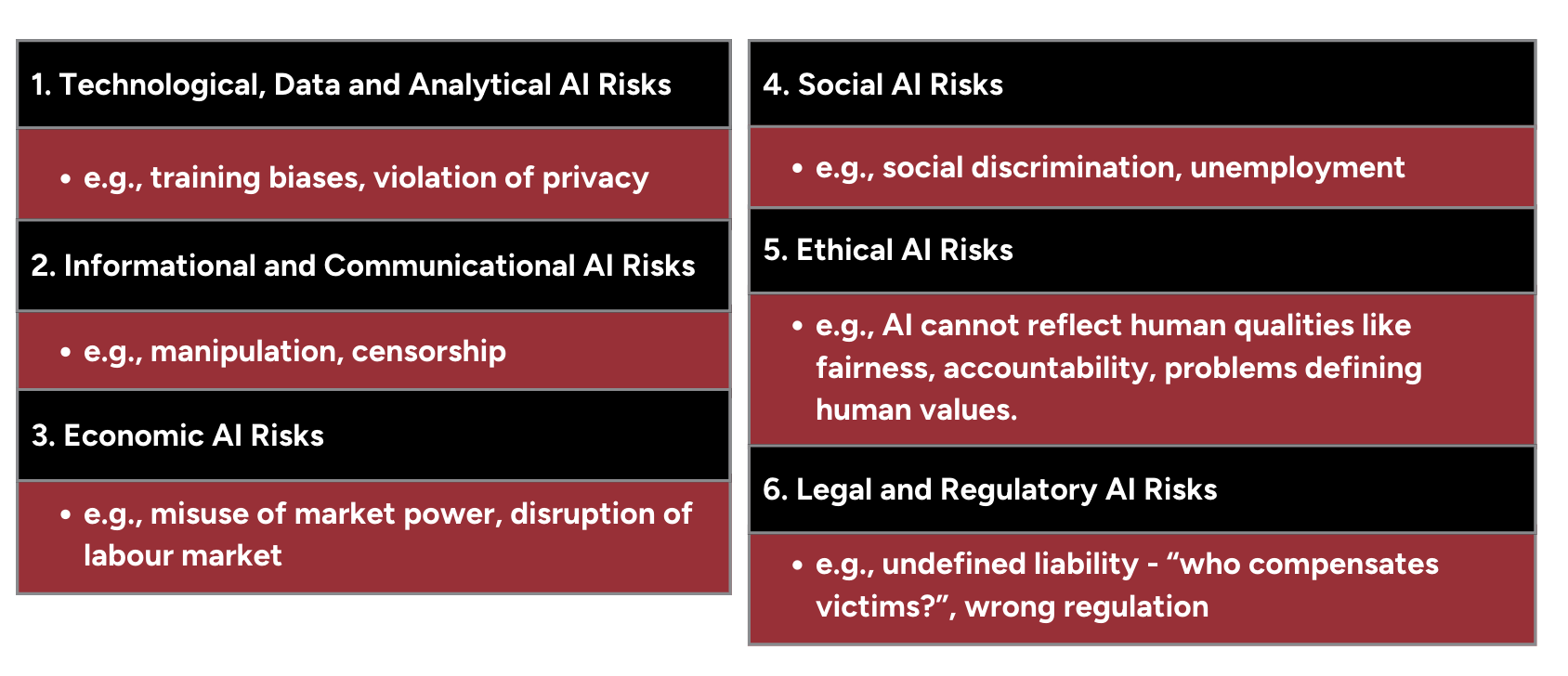

This paper presents a taxonomy of six AI risk categories focused specifically on public sector governance. The taxonomy was developed through a systematic literature review that began with 1,471 records and retained 16 studies for the final analysis. The six categories are described in turn below.

The potential for loss of control over AI systems, including autonomous decision-making without human oversight, programming errors due to complexity or lack of expertise, and poor data quality or biases in training data that lead to system malfunctions.

Risk of AI-driven information manipulation, including targeted disinformation campaigns, computational propaganda, algorithmic censorship, and the creation of "filter bubbles" that restrict access to diverse information sources.

Disruption of economic systems through widespread automation, including massive unemployment, loss of taxpayer base, organizational knowledge loss as AI systems replace human workers, and potential collapse of economic structures.

Technological unemployment leading to social unrest, privacy and security threats to individuals and society, growing resistance to AI adoption, and transformation of human-to-human interactions in potentially harmful ways.

AI systems lacking legitimate ethical foundations when making decisions that affect society, AI-based discrimination against certain population groups, and reproduction of human biases and prejudices through AI systems.

Unclear accountability and liability frameworks when AI systems fail or cause harm, inadequate regulatory scope that misses important governance aspects, and the challenge of regulating rapidly evolving AI technologies.

This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper; credit goes to Bernd W. Wirtz, Jan C. Weyerer, and Ines Kehl. For full details, please refer to the original publication: https://doi.org/10.1016/j.giq.2022.101685.
