This week we spotlight the 29th framework of risks from AI included in the AI Risk Repository: Habbal, A., Ali, M. K., & Abuzaraida, M. A. (2024). Artificial Intelligence Trust, Risk and Security Management (AI TRiSM): Frameworks, applications, challenges and future research directions. Expert Systems with Applications, 240, 122442. https://doi.org/10.1016/j.eswa.2023.122442

Paper focus

This paper reviews the AI Trust, Risk, and Security Management (AI TRiSM) framework, which is designed to help organizations manage the security, privacy, and ethical risks associated with AI.

Included risk categories

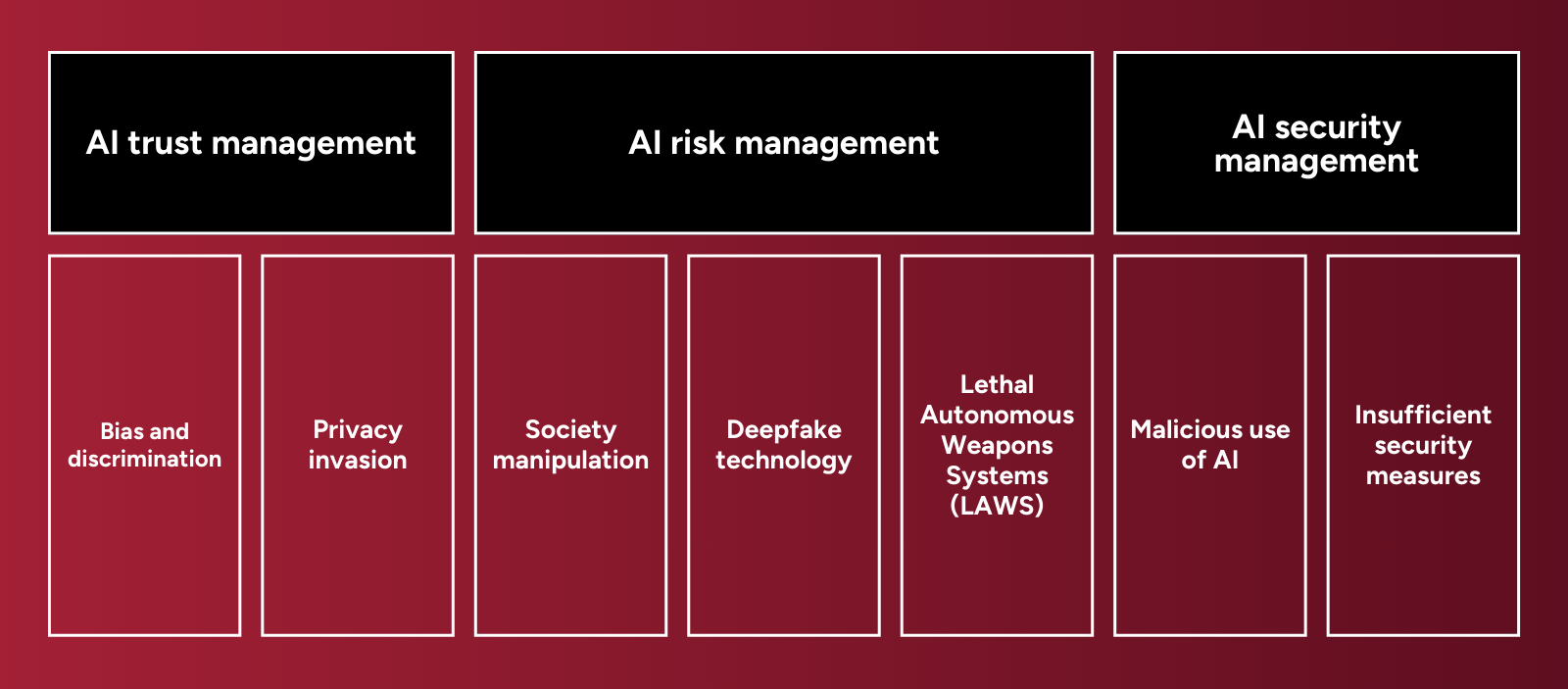

The paper presents an overview of AI challenges organized under the three primary aspects of the AI TRiSM framework, each with its own types of threats and resulting damages.

1. AI trust management

2. AI risk management

3. AI security management

Key features of the framework and associated paper

⚠️ Disclaimer: This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper; credit goes to Adib Habbal, Mohamed Khalif Ali, and Mustafa Ali Abuzaraida. For full details, please refer to the original publication: https://doi.org/10.1016/j.eswa.2023.122442.
