Give feedback on the taxonomy or suggest documents to include

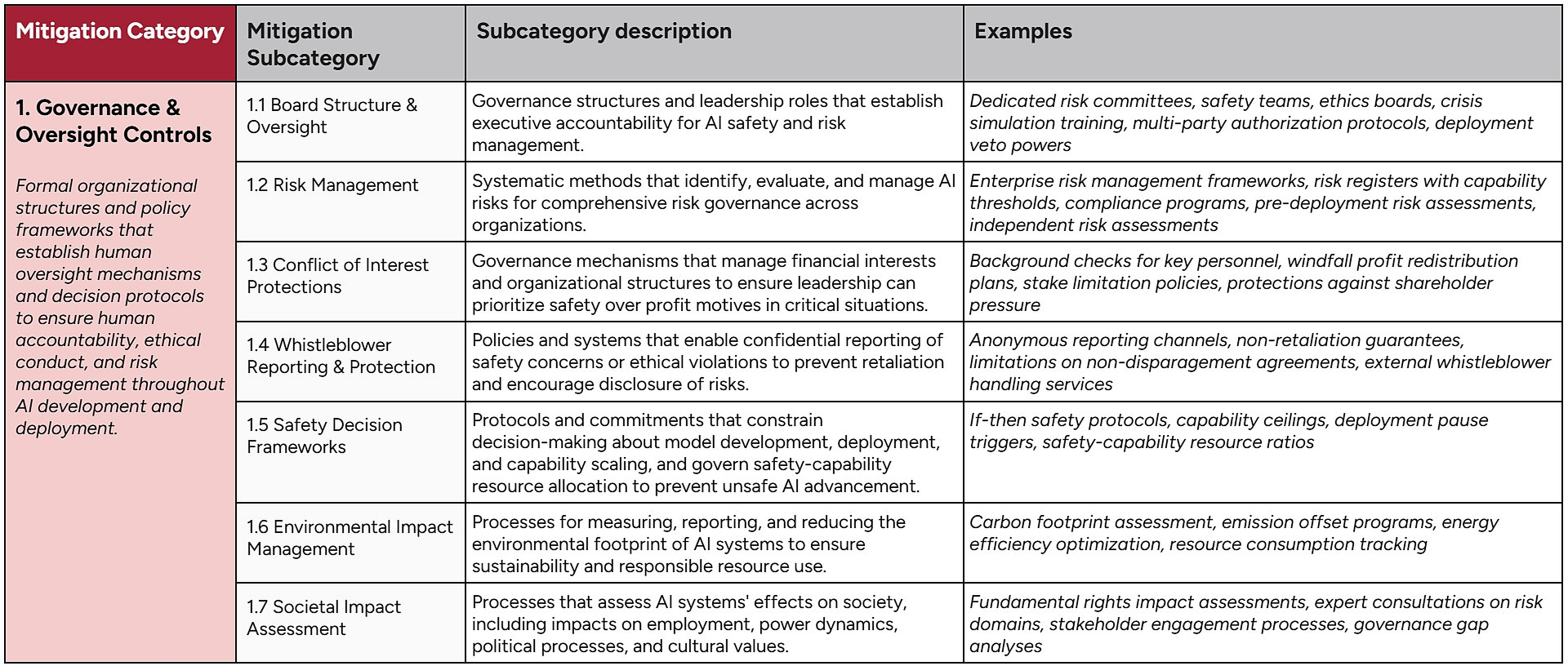

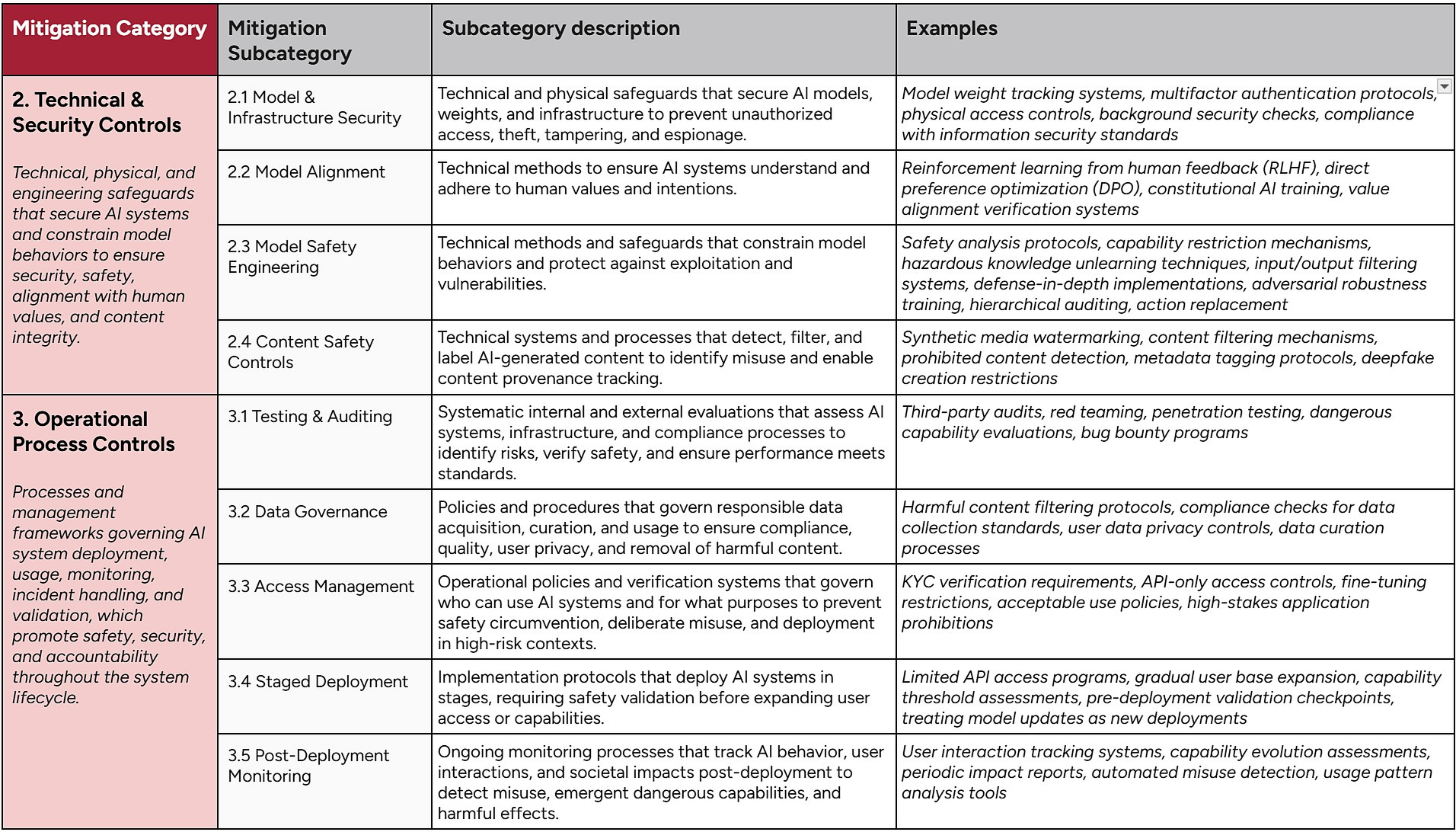

Refer to Appendix A for full draft taxonomy including subcategory descriptions & examples

We conducted this evidence scan to identify & synthesize emerging practice in AI risk mitigations, as part of the broader MIT AI Risk Initiative (airisk.mit.edu).

Risks from AI and proposed mitigations to reduce the likelihood or severity of these risks have been documented in policy, technical, and risk management reports. Some of this work has suggested frameworks or taxonomies to organize mitigations (e.g., NIST AI 600-1 or Eisenberg’s Unified Control Framework). However, each document uses its own jargon and has gaps in coverage; it can also be difficult to know which framework fits the needs of a specific actor or decision-maker.

To address this, we identified relevant documents and extracted specific AI risk mitigations into an AI Risk Mitigation Database, then constructed a draft AI Risk Mitigation Taxonomy.

We release the database and draft taxonomy for comment & feedback now, because:

We intend to follow up this initial evidence scan with a systematic review of AI risk mitigation frameworks, aiming to improve coverage of the database and comprehensiveness and clarity of the mitigation taxonomy.

Overall, our intention with this work - as part of the broader MIT AI Risk Initiative - is to help individuals and organizations understand AI risks, identify and implement effective risk mitigations, and coordinate to reduce systemic and catastrophic risks.

Our review identified 13 relevant documents published between 2023 and 2025. We started with documents we had identified through previous work (e.g., an evidence scan of AI risk management frameworks; a systematic review of AI risk frameworks), then expanded using:

Potentially relevant documents were screened by the author team before inclusion. We included documents that proposed a framework or other structured list of mitigations for AI risks.

We manually extracted 831 mitigations from the 13 included documents, using the following coding frame:

We defined an AI risk mitigation as “an action that reduces the likelihood or impact of a risk” (adapted from Society for Risk Analysis, 2018; Actuarial Standards Board, 2012; NIST SP 800-30 Rev. 1 from CNSSI 4009; see Appendix B).

Overall, we had three objectives when constructing a mitigation taxonomy:

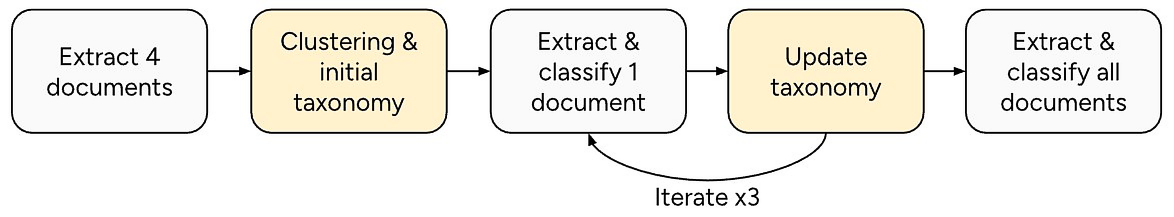

We developed our AI risk mitigation taxonomy using an iterative approach where we extracted and classified mitigations from several documents at a time. Figure 2 describes the process.

We first manually extracted mitigations from 4 documents, and constructed an initial taxonomy based on a thematic analysis and clustering of those mitigations. We placed significant weight on existing categories of mitigations presented in the documents. We experimented with several approaches when we conducted the initial clustering, including:

After we classified all of the extracted mitigations according to the initial taxonomy, we then identified mitigations that could not be classified and gathered internal feedback within the author team. We modified the taxonomy to accommodate the unclassified mitigations, then tested the updated taxonomy on mitigations from one additional document. We repeated this process three times in total.

Over three iterations, we settled on a combination of a system lifecycle and socio-technical approach. However, we think that the other approaches described above (e.g., risk management) could also be useful taxonomies to explore.

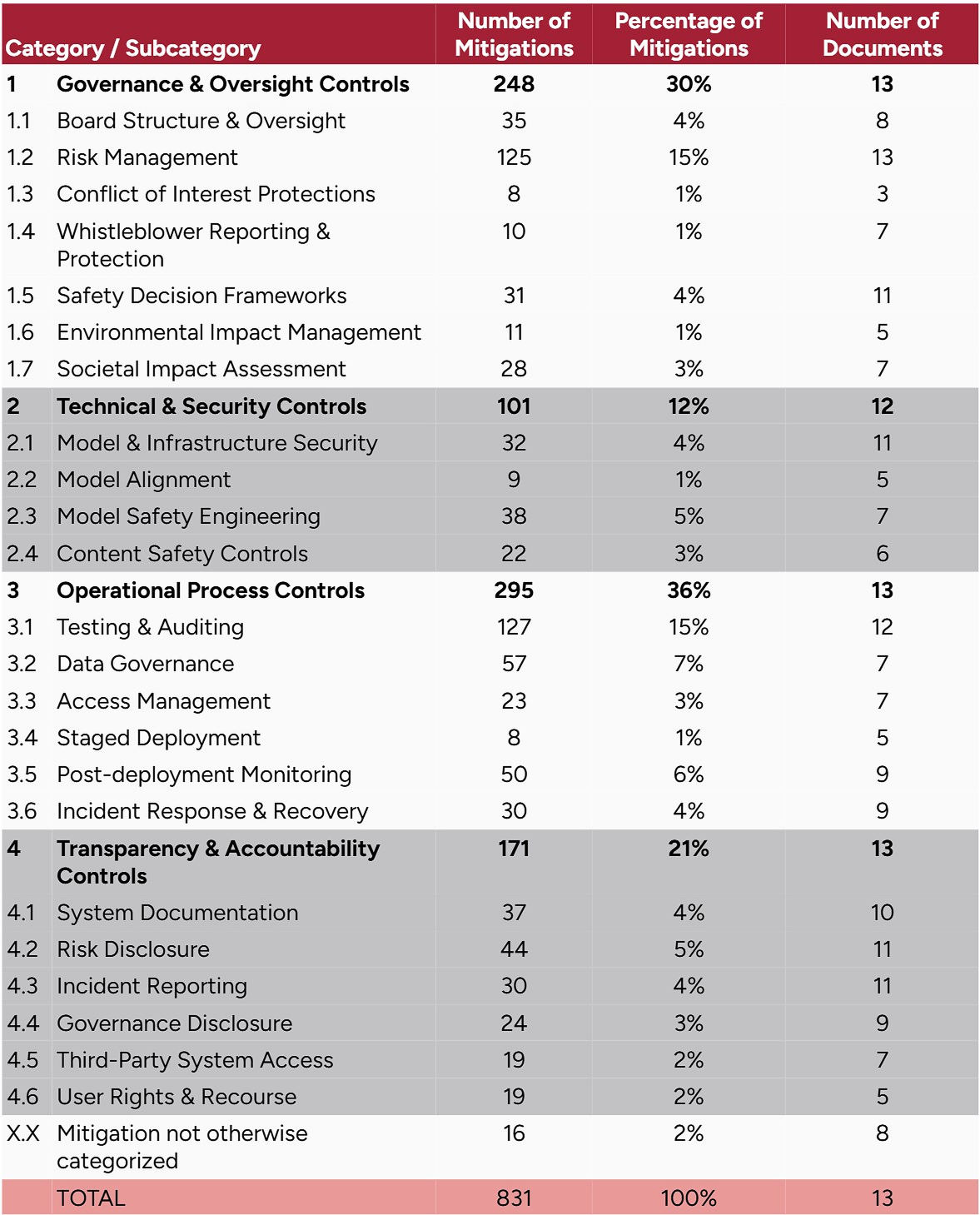

Our final draft taxonomy clustered mitigations into four categories (Governance & Oversight, Technical & Security, Operational Process, and Transparency & Accountability) and 23 subcategories (see Appendix A for the full taxonomy, or explore an interactive taxonomy).

All remaining mitigations from the documents were then classified using the final draft taxonomy. In total, we classified 815 (98%) of the 831 extracted mitigations; the remaining 16 could not be classified.
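The coverage figure above follows directly from the counts reported in this section. A minimal Python sketch of the arithmetic, using only numbers stated in the text (the variable names are ours, chosen for illustration):

```python
# Counts reported in this section of the evidence scan.
extracted = 831    # mitigations extracted from the 13 included documents
classified = 815   # mitigations placed in the final draft taxonomy

# Mitigations that could not be classified, and the share that could.
unclassified = extracted - classified
coverage = classified / extracted

print(f"unclassified: {unclassified}")
print(f"coverage: {coverage:.0%}")
```

The same calculation applies to any subcategory count reported later (for example, a subcategory's count divided by 831 gives its share of all extracted mitigations).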

In this evidence scan, each author involved in extraction and classification experimented with LLM / AI assistants in their work. Some examples of how we used AI assistants:

We originally planned to heavily leverage LLM / AI assistance for extraction & classification, but experienced several problems with the quality and consistency of outputs. We discovered partway through our extraction & classification of the documents that the AI assistants:

When we identified these issues, we conducted quality assurance on the extracted and classified mitigations through a detailed audit of the mitigations database. This involved manually reviewing the included documents to verify that all mitigations had been accurately extracted and that no spurious entries had been added.

After verifying the extractions, a member of the author team reviewed all classifications. This involved reading each mitigation name & description, reviewing the AI-proposed classification & justification, and either accepting the proposal or changing the classification (and documenting their justification for doing so). Multiple team members cross-checked classifications to ensure consistency.
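The review step described above (accept the AI-proposed classification, or change it and document why) can be sketched as a small record type. This is an illustrative sketch only: the class name, field names, and the example values are our assumptions, not the schema of the actual mitigations database.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClassificationReview:
    """One human review of an AI-proposed classification (hypothetical schema)."""

    mitigation_name: str
    mitigation_description: str
    ai_proposed_subcategory: str
    ai_justification: str
    final_subcategory: Optional[str] = None
    reviewer_justification: Optional[str] = None

    def accept(self) -> None:
        """The reviewer agrees with the AI proposal."""
        self.final_subcategory = self.ai_proposed_subcategory

    def override(self, subcategory: str, justification: str) -> None:
        """The reviewer changes the classification and must document why."""
        if not justification.strip():
            raise ValueError("an override requires a documented justification")
        self.final_subcategory = subcategory
        self.reviewer_justification = justification

    @property
    def was_overridden(self) -> bool:
        """True once a final subcategory differs from the AI proposal."""
        return (
            self.final_subcategory is not None
            and self.final_subcategory != self.ai_proposed_subcategory
        )


# Invented example values, for illustration only.
review = ClassificationReview(
    mitigation_name="Red teaming",
    mitigation_description="Adversarial testing of a model before release.",
    ai_proposed_subcategory="2.3 Safety Engineering",
    ai_justification="Hypothetical AI rationale.",
)
review.override("3.1 Testing & Auditing", "Describes an evaluation activity.")
print(review.was_overridden)
```

Requiring a non-empty justification on every override mirrors the documentation requirement in the text and leaves an audit trail that other team members can cross-check.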

We include this report on the problems of using AI assistance to accelerate evidence synthesis for transparency and to highlight the current need for careful human validation of AI assistance to ensure that research findings are accurate.

The distribution of mitigations across each of our taxonomy categories and subcategories is shown in Table 1.

In this section, we discuss:

We classified 125 (15%) of all identified mitigations as ‘risk management’ using our draft taxonomy, but there were many different processes and actions that could be classified under this umbrella term.

We believe this reflects the fact that ‘AI risk management’ is an emerging concept; the boundaries of risk management - and what should be included or excluded as a risk management action - are not yet settled. In addition, we believe that the category of risk management in our draft taxonomy needs to be decomposed and clarified.

In 2023, Schuett et al. conducted an expert survey of leaders from AGI companies, academia, and civil society. Experts were asked to agree, disagree, or express uncertainty about 50 different AGI safety practices. Of all the practices surveyed, enterprise risk management was the statement with the highest “I don’t know” rate (26%); as Schuett et al. remark, this “indicates that many respondents simply did not know what enterprise risk management is and how it works”.

In contrast, some of the included documents in our evidence scan explicitly defined risk management (e.g., NIST, 2024; Campos et al., 2025; Gipiškis et al., 2024; Bengio et al., 2025; see Appendix B for details).

These definitions typically included several related stages or functions, including identifying risks, assessing / evaluating risks, and implementing measures to reduce risks. Some also emphasized the role of governance - rules, procedures, or culture - to provide structure to or sustain the other stages or functions, or monitoring AI system behavior once it is deployed and being used.

On the basis of these descriptions, we defined 1.2 Risk Management in our draft taxonomy as:

Systematic methods that identify, evaluate, and manage AI risks for comprehensive risk governance across organizations.

However, once we started classifying mitigations from the included documents, we found that many of them could be classified as risk management under this definition. These included mitigations as varied as conducting adequacy assessments of a lab’s safety and security frameworks (EU AI Office, 2025); implementing System-Theoretic Process Analysis (STPA; Gipiškis et al., 2024); and updating due diligence processes when purchasing generative AI systems (NIST, 2024).

Our finding that many mitigations can be classified under risk management, the emerging conceptualization of AI risk management, and the inconsistency between current understanding (Schuett et al., 2023) and best-practice proposals (e.g., NIST, 2024; Campos et al., 2025) together suggest two potential challenges to effectively addressing risks from AI:

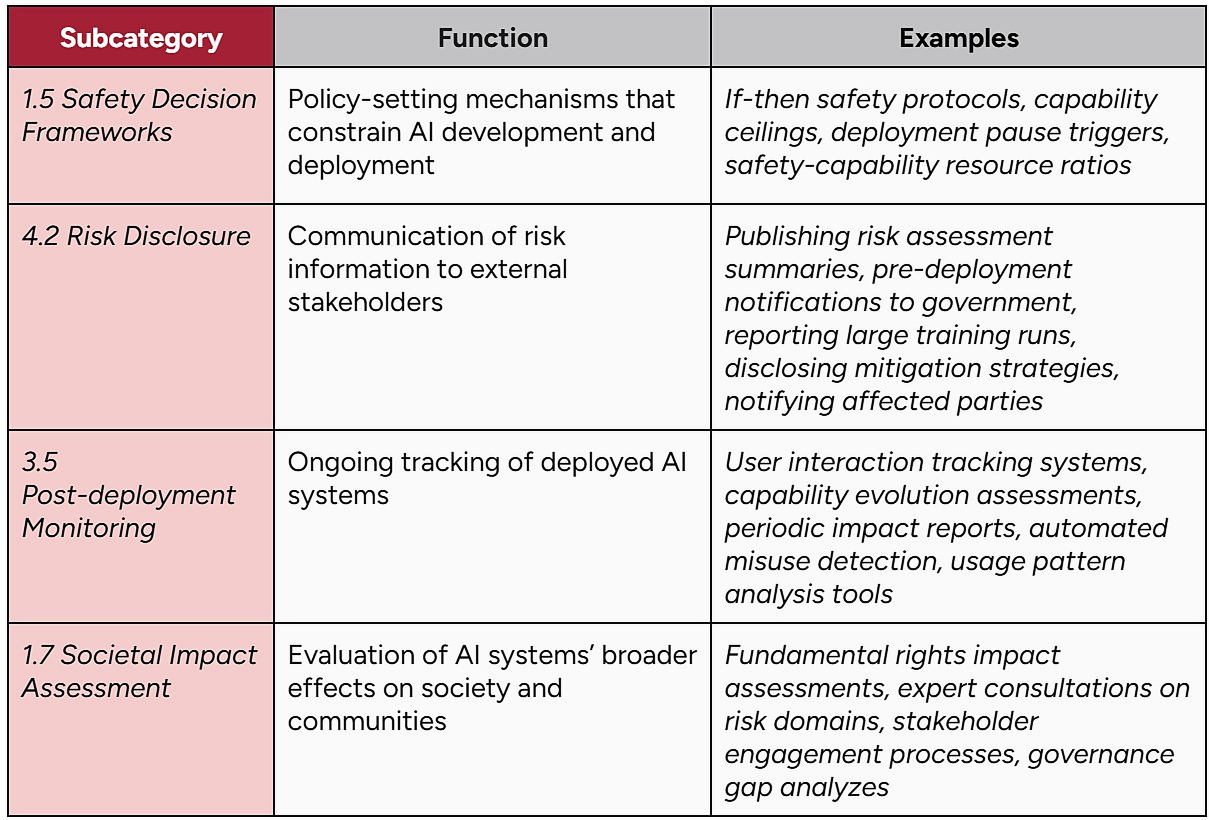

Our draft taxonomy, developed through iteration to comprehensively classify all of the mitigations extracted from the included documents, likely requires further decomposition of risk management as a (sub)category of mitigations. For example, other existing subcategories have conceptual overlap with the specific functions of risk management (e.g., identifying, analyzing, enacting measures, governing and/or monitoring), including safety decision frameworks (1.5), risk disclosure (4.2), and post-deployment monitoring (3.5).

We would welcome feedback to disambiguate ‘risk management’ as a category of mitigations. We are considering the following directions:

We classified 127 (15%) of all identified mitigations as ‘testing & auditing’ using our draft taxonomy; it was the most commonly mentioned subcategory of mitigations.

We defined 3.1 Testing & Auditing in our draft taxonomy as: Systematic internal and external evaluations that assess AI systems, infrastructure, and compliance processes to identify risks, verify safety, and ensure performance meets standards.

As with risk management, we noticed that testing & auditing could be used to classify many different actions proposed in the included documents. Extractions from the documents indicate that testing and auditing can be quite wide-ranging, encompassing red teaming, external and internal audits, benchmarks, and bug bounty programs (Campos et al., 2025; Schuett et al., 2023).

In addition, other existing subcategories have conceptual overlap with some of the activities described under testing & auditing, including risk management (1.2), safety engineering (2.3), and third-party system access (4.5).

We would welcome feedback to disambiguate ‘Testing & Auditing’ as a category of mitigations. We are considering the following directions:

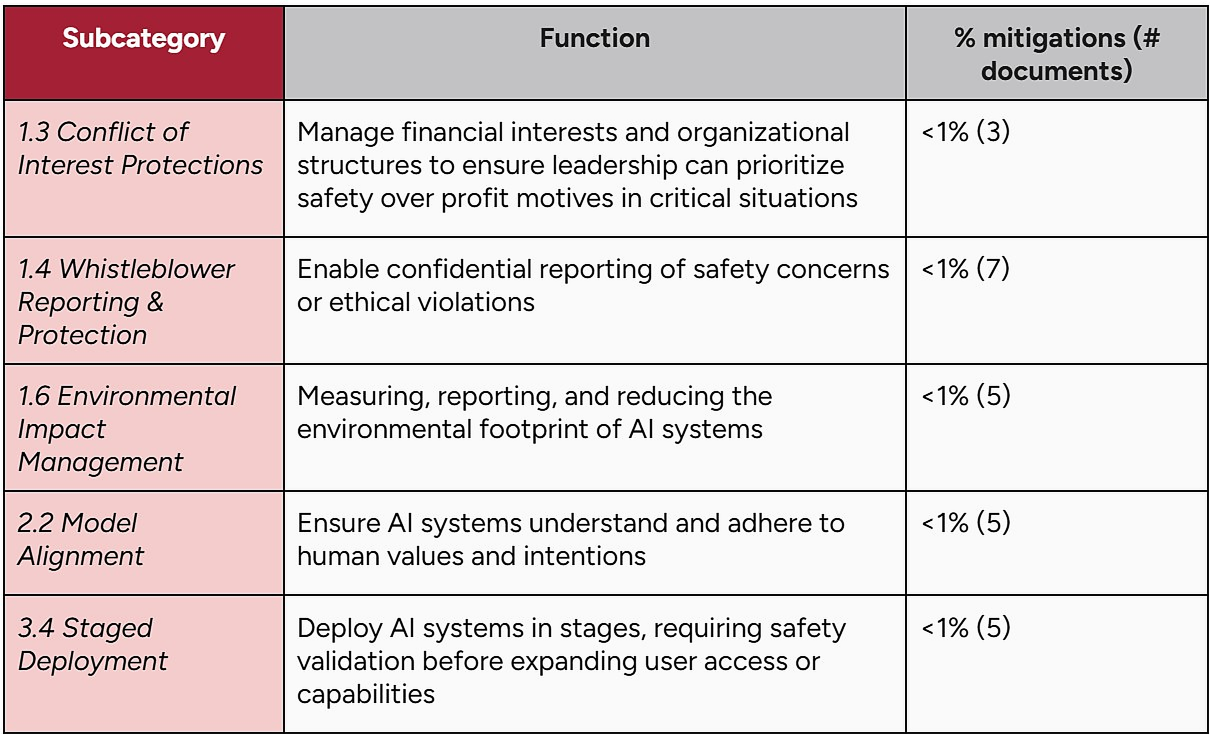

Several categories of mitigations received limited attention in the included documents. We wish to stress that the frequency with which a mitigation is classified does not necessarily reflect its relative importance or rate of adoption in practice; we also recognize that our decisions in drawing category boundaries will influence the relative frequency of mitigations in each category.

However, these findings suggest that some of these categories of mitigation could be investigated, developed, and defined in more detail. Table 4 presents these mitigation categories.

We wish to draw special attention to 2.2 Model Alignment. Actions to align AI models and systems with human goals & values were rarely mentioned, despite their fundamental importance in addressing catastrophic and existential risks from advanced AI systems.

Our evidence scan, development of the draft AI risk mitigations database, and construction of the draft taxonomy suggest several directions for applied research. We welcome feedback on these proposed directions.

Many of the mitigations extracted from the documents did not specify what type of AI risk they were intended to address. This is a problem, given that an actor that seeks to address misinformation risks would likely need to implement a different mitigation than one that seeks to address AI possessing dangerous capabilities. Not all actors are vulnerable to or accountable for addressing all AI risks.

In our previous work, the AI Risk Repository, we constructed taxonomies to classify risks: by causal factor and by domain of harm. These and other taxonomies of risk are starting to be mapped to other structured knowledge about AI, including legislation and standards (e.g., ETO AGORA), incidents of harm (e.g., AI Incident Database), and comprehensive ontologies to structure knowledge on AI risks (e.g., the AI Risk Ontology; The IBM Risk Atlas Nexus).

We strongly believe that a mapping of AI risks to mitigations is needed to advance coordinated action on AI risks. Some of the included documents do propose relationships between risks and mitigations, including NIST AI 600-1 (2024) and especially Gipiškis et al. (2024). Gipiškis and colleagues identify risk sources and link them to corresponding risk measures, a promising approach for addressing this gap. Developing or linking the risk sources and measures to taxonomies and ontologies can help to increase their interoperability with other work that seeks to structure knowledge on AI risks and mitigations. Table 5 illustrates examples of risk sources and measures from Gipiškis et al. (2024).

Note: this table represents only a subset of all the risk sources and corresponding measures identified by Gipiškis et al. (2024), sampled for illustrative purposes.
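One way to make a risk-to-mitigation mapping usable by a specific actor is to store the links as pairs and look up only the risks that actor is accountable for. The sketch below is a hedged illustration: the two risk names come from the examples earlier in this section, but every mitigation label and every pairing is a placeholder of ours, not a finding from the database or from Gipiškis et al. (2024).

```python
from collections import defaultdict

# Hypothetical (risk, mitigation) links. The pairings are placeholders
# for illustration and do not reflect any included document.
links = [
    ("misinformation", "placeholder mitigation A"),
    ("misinformation", "placeholder mitigation B"),
    ("dangerous capabilities", "placeholder mitigation B"),
    ("dangerous capabilities", "placeholder mitigation C"),
]

# Index the links by risk so each risk maps to its set of mitigations.
risk_to_mitigations = defaultdict(set)
for risk, mitigation in links:
    risk_to_mitigations[risk].add(mitigation)


def mitigations_for(risks):
    """Return the sorted union of mitigations linked to the given risks."""
    selected = set()
    for risk in risks:
        # .get avoids creating empty entries for risks with no links.
        selected |= risk_to_mitigations.get(risk, set())
    return sorted(selected)


print(mitigations_for(["misinformation"]))
```

An actor concerned with several risks passes all of them at once, and mitigations shared across risks appear only once in the result.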

In this evidence scan, we searched for documents that discussed AI risk mitigations. The documents we found and included tended to focus on organizations that were developing and deploying the most advanced AI systems (e.g., “frontier AI”, “generative AI”, “general-purpose AI”). However, we lack an understanding of actions that other actors could take to mitigate AI risks.

It could be useful to identify proposed and enacted actions by these actors, and how they reinforce, substitute, or undermine others’ actions to mitigate AI risks.

A non-exhaustive set of examples of such actors whose actions could be investigated includes:

In risk management frameworks and in our draft taxonomy, there is a focus on measurable and observable actions that fit within a typical conceptualization of what organizations ‘do’.

We think that investigating the organizational conditions that reduce risks from AI could be an important contribution and one that sits in tension with our focus on classifying mitigations, which naturally privileges observable actions.

One example of supportive organizational conditions is a positive company safety culture, mentioned in several included documents but especially in Campos et al. (2025), where it is framed as part of the risk governance component of frontier AI risk management.

Other examples could include:

Access detailed bibliographic information about included documents on Airtable

Access all included documents in a public Paperpile folder

The first comprehensive international synthesis of evidence on AI risks and their relevant technical mitigations, mandated by nations attending the AI Safety Summit in Bletchley Park. This report represents a global collaboration of 30 nations and is the culmination of 100 AI experts’ efforts to establish a common understanding of frontier AI systems.

Bengio, Y., et al. (2025). International AI safety report. UK AI Safety Institute. https://www.gov.uk/government/publications/international-ai-safety-report-2025

A recent framework proposing an integrated risk management strategy for frontier AI risks, emphasizing the gap between current AI practices and established risk management methodologies from high-risk industries. The approach consists of four stages: (1) risk identification, (2) risk analysis and evaluation, (3) risk treatment, and (4) risk governance.

Campos, M., Stewart, A., & Zhang, R. (2025). A frontier AI risk management framework: Bridging the gap between current AI practices and established risk management. arXiv. https://arxiv.org/pdf/2502.06656

A comprehensive framework establishing 42 controls for enterprise AI governance, risk management, and regulatory compliance. The framework unifies fragmented approaches into a cohesive system for managing AI risks across organizations.

Eisenberg, C., Seaver, A., & Rubin, J. (2025). The unified control framework: Establishing a common foundation for enterprise AI governance, risk management and regulatory compliance. arXiv. https://arxiv.org/abs/2503.05937

A systematic analysis identifying key risk sources for general-purpose AI systems and corresponding management measures, developed to support global standardization efforts.

Gipiškis, D., et al. (2024). Risk sources and risk management measures in support of standards for general-purpose AI systems. arXiv. https://arxiv.org/abs/2410.23472

A survey of experts ranging from AI safety to CBRN risks to determine the most effective risk management practices for general-purpose AI models. Three mitigations emerge as especially salient: safety incident reports and security information disclosure, third-party pre-deployment model audits, and pre-deployment risk assessments. Policy guidance is offered for delivering the most crucial measures to mitigate risks from frontier AI systems.

Uuk, R., Lam, H., Simeon, V., & O'Brien, N. (2024). Effective mitigations for systemic risks from general-purpose AI. arXiv. https://doi.org/10.48550/arXiv.2412.02145

The 2024 FLI AI Safety Index conducted a comprehensive review of six prominent AI companies by an independent panel of seven technical AI and governance specialists. Key findings include significant risk management discrepancies across the companies, vulnerability to adversarial attacks, lack of control protocols, and insufficient external oversight. Mitigation strategies are recommended to counteract these AI risk deficiencies.

Future of Life Institute. (2024). FLI AI safety index 2024. Future of Life Institute. https://futureoflife.org/document/fli-ai-safety-index-2024/

A survey of experts from AGI companies (including OpenAI, Google DeepMind, and Anthropic), academia, and civil society on best practices for AGI safety and governance. The paper asks about 50 specific practices ranging from technical safety measures to governance structures.

Schuett, J., Dreksler, N., Anderljung, M., McCaffary, D., Heim, L., Bluemke, E., & Garfinkel, B. (2023). Towards best practices in AGI safety and governance: A survey of expert opinion. arXiv. https://arxiv.org/abs/2305.07153

A critique of the outsized emphasis on evidence-based AI policy, which can discourage regulatory action in the face of uncertain risks. Challenging such stagnancy, the authors maintain that uncertainty is cause for passing regulation in the near term. They recommend mitigation strategies that are grounded in but not overreliant on empirical findings, spanning AI governance institutes to shutdown procedures for AI systems.

Casper, S., et al. (2025). Pitfalls of evidence-based AI policy. arXiv. https://arxiv.org/abs/2502.09618

The implementing guidance for the EU AI Act's requirements on general-purpose AI systems, detailing specific measures for systemic risk assessment, mitigation, and governance.

EU AI Office. (2025). EU AI Act: General purpose AI code of practice (Draft 3). European Commission. https://code-of-practice.ai/

A comprehensive catalog of safety practices being implemented or considered by frontier AI organizations, providing transparency into current industry approaches to risk management.

UK Department for Science, Innovation and Technology. (2023). Emerging processes for frontier AI safety. https://www.gov.uk/government/publications/emerging-processes-for-frontier-ai-safety

US National Institute of Standards and Technology (NIST) guidance adapting the AI Risk Management Framework specifically for generative AI, containing detailed controls and implementation guidelines.

National Institute of Standards and Technology. (2024). Artificial intelligence risk management framework: Generative artificial intelligence profile (NIST AI 600-1). NIST. https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.600-1.pdf

A profile aligned with the US NIST AI Risk Management Framework providing risk management standards specifically tailored for general-purpose AI systems and foundation models, bridging multiple existing frameworks.

Barrett, A. M., Newman, J., Nonnecke, B., Hendrycks, D., Murphy, E. R., & Jackson, K. (2024). AI risk management standards profile for GPAIS. UC Berkeley Center for Long-Term Cybersecurity. https://cltc.berkeley.edu/wp-content/uploads/2023/11/Berkeley-GPAIS-Foundation-Model-Risk-Management-Standards-Profile-v1.0.pdf

Proposed legislation establishing safety requirements for organizations developing frontier AI models - specifically, models which cost more than $100 million to train, with compute of >10^26 FLOP (or fine-tuned models of similar size). Though ultimately vetoed by the Governor of California, SB 1047 set important precedents for AI regulation.

Wiener, S. (2024). California State Senate Bill 1047: Safe and Secure Innovation for Frontier Artificial Intelligence Models Act. California State Legislature. https://leginfo.legislature.ca.gov/faces/billNavClient.xhtml?bill_id=202320240SB1047

We define a ‘risk’ as the possibility of an unfortunate occurrence

We define a ‘mitigation’ as an action that reduces the likelihood or impact of a risk

NIST SP 800-30 Rev. 1 (from CNSSI 4009) defines risk mitigation as: “Prioritizing, evaluating, and implementing the appropriate risk-reducing controls/countermeasures recommended from the risk management process”

Bengio et al. (2025), in International AI Safety Report 2025, defined AI risk management as: The systematic process of identifying, evaluating, mitigating and monitoring risks.

Campos et al. (2025), in A Frontier AI Risk Management Framework, propose a framework for frontier AI risk management that includes four components: risk identification, risk analysis and evaluation, risk treatment, and risk governance.

NIST (2024), in Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile (NIST AI 600-1), describes AI risk management as including four functions (consistent with NIST AI 100-1): Govern, Map, Measure, and Manage.

Gipiškis et al. (2024) define an AI risk management measure (which is embedded within a broader safety engineering process) as: a measure that is designed to lower risk, either when applied alone or in combination with other measures. This can include identification, mitigation, or prevention of risk sources or individual risks relevant to a given system or class of systems.

Sophia's work on this project was funded by the CBAI Summer Research Fellowship.