This week, we spotlight the 26th framework of AI risks included in the AI Risk Repository: AI Verify Foundation. (2023). Summary Report for Binary Classification Model of Credit Risk. AI Verify Foundation. https://aiverifyfoundation.sg/downloads/AI_Verify_Sample_Report.pdf

Paper focus

This paper is a sample summary report that demonstrates how the AI Verify Testing Framework is applied. The framework underpins a toolkit that organizations use to systematically evaluate their responsible AI practices when deploying traditional and generative AI applications. It was developed in consultation with companies from different sectors and is aligned with other international AI governance frameworks from ASEAN, the European Union, the OECD, and the USA.

Included risk categories

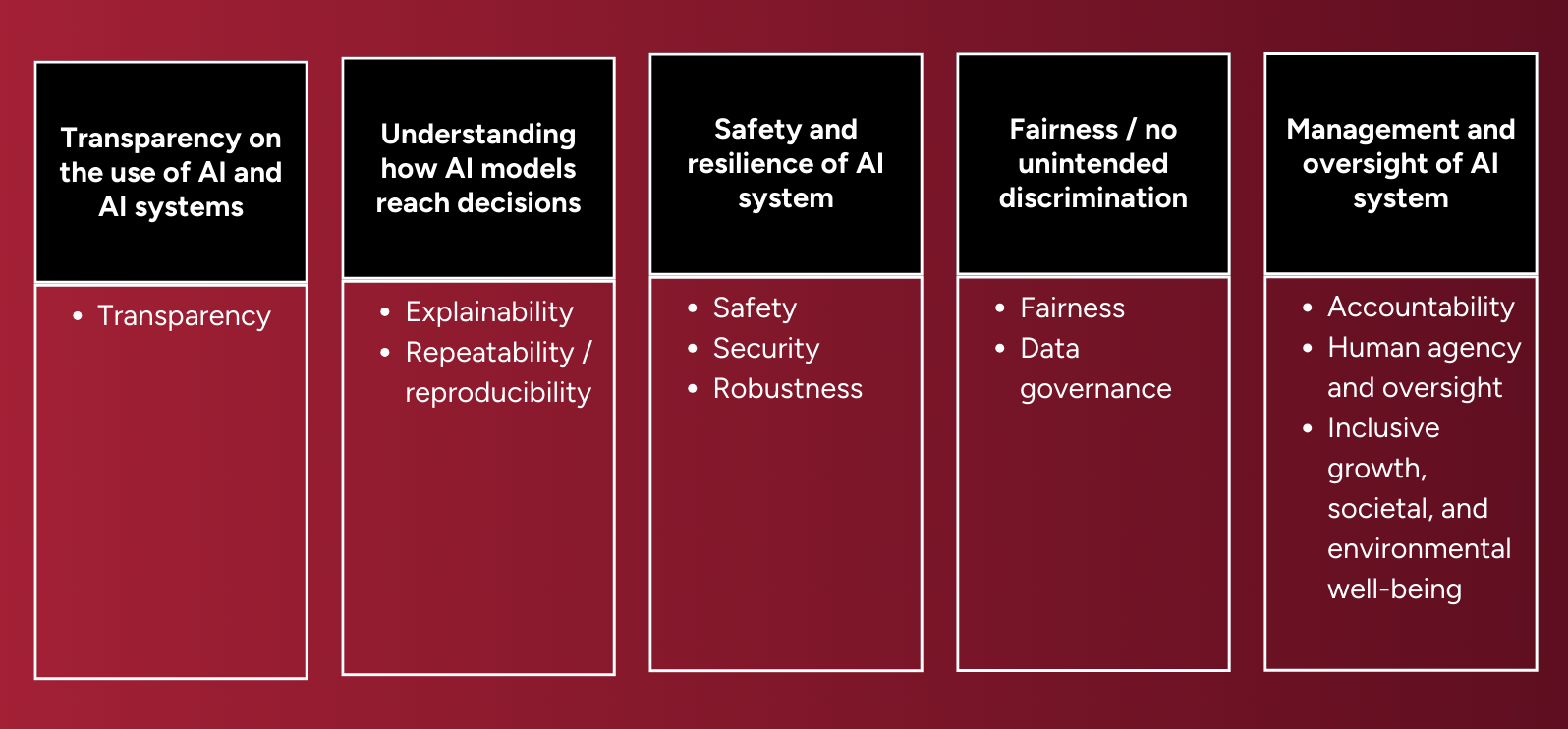

The AI Verify Testing Framework consists of 11 AI ethics principles, grouped into five areas:

1. Transparency on the use of AI and AI systems

2. Understanding how AI models reach decisions

3. Safety and resilience of AI systems

4. Fairness / no unintended discrimination

5. Management and oversight of AI systems

Key features of the framework and the associated paper

⚠️ Disclaimer: This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper; credit goes to the AI Verify Foundation. For full details, please refer to the original website and sample report: https://aiverifyfoundation.sg/downloads/AI_Verify_Sample_Report.pdf.

Further engagement

→ View all the frameworks included in the AI Risk Repository