This week we spotlight the twenty-second framework of risks from AI included in the AI Risk Repository: Hendrycks, D., Mazeika, M., & Woodside, T. (2023). An Overview of Catastrophic AI Risks. In arXiv [cs.CY]. arXiv. http://arxiv.org/abs/2306.12001

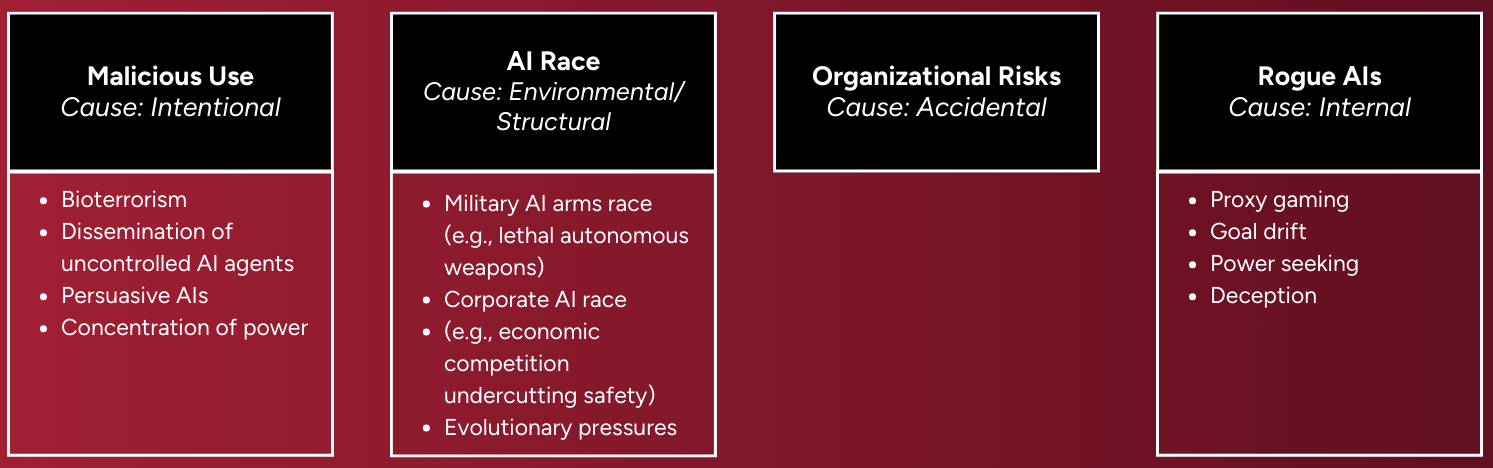

Paper Focus: This paper provides an overview of the sources of catastrophic risk from AI, organized into four main categories. Each category corresponds to a proximate cause: intentional, environmental/structural, accidental, or internal. For each category, the authors also present illustrative hypothetical scenarios and practical risk mitigations.

1. Malicious use (cause: intentional) refers to malicious actors using advanced AI to cause harm.

2. AI race (cause: environmental/structural) refers to competitive pressures that push corporations and nations to develop and deploy AIs in unsafe ways.

3. Organizational risks (cause: accidental) refer to catastrophic accidents arising in the development and deployment of advanced AI, due to human factors and complex systems.

4. Rogue AIs (cause: internal) refers to the loss of control over AIs as they become more intelligent than humans.

Key features of the framework and associated paper:

⚠️ Disclaimer: This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper, and credit goes to Dan Hendrycks, Mantas Mazeika, and Thomas Woodside. For full details, please refer to the original publication: https://arxiv.org/abs/2306.12001.
