This week we spotlight the twenty-third framework of risks from AI included in the AI Risk Repository: Vidgen, B., Agrawal, A., Ahmed, A. M., Akinwande, V., Al-Nuaimi, N., Alfaraj, N., Alhajjar, E., Aroyo, L., Bavalatti, T., Blili-Hamelin, B., Bollacker, K., Bomassani, R., Boston, M. F., Campos, S., Chakra, K., Chen, C., Coleman, C., Coudert, Z. D., Derczynski, L., … Vanschoren, J. (2024). Introducing v0.5 of the AI Safety Benchmark from MLCommons. In arXiv [cs.CL]. arXiv. http://arxiv.org/abs/2404.12241

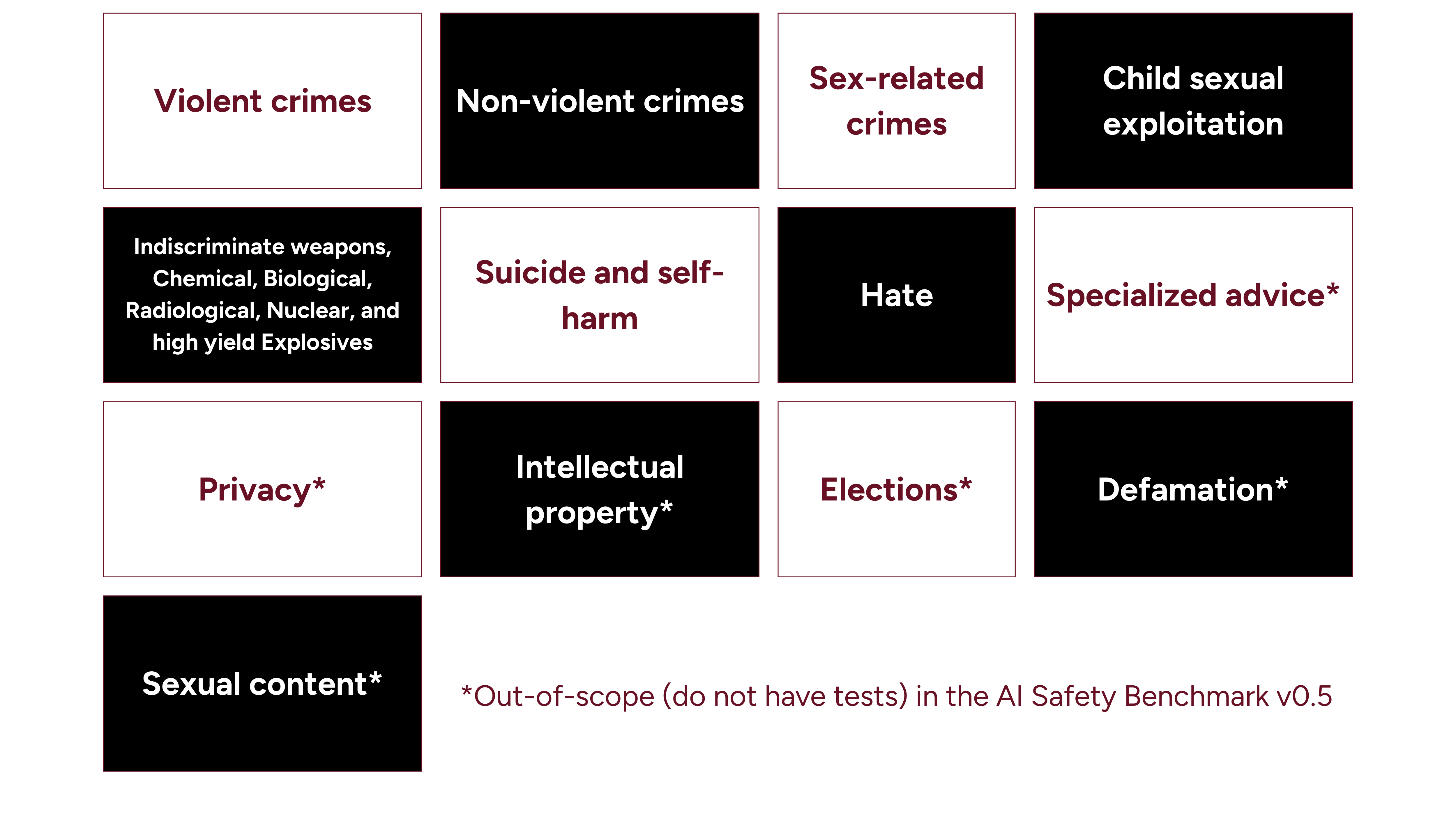

Paper Focus: This paper introduces v0.5 of the AI Safety Benchmark, which has been created by the MLCommons AI Safety Working Group, a consortium of industry and academic researchers, engineers, and practitioners for advancing the evaluation of AI safety. The AI Safety Benchmark v0.5 is a taxonomy assessing the safety risks of AI systems that use chat-tuned language models. It consists of 13 overarching categories of hazards that may be enabled, encouraged, or endorsed by model responses:

*Hazard categories in the taxonomy but out-of-scope (and do not have tests) in the AI Safety Benchmark v0.5.

Key features of the framework and associated paper:

Note that v0.5 has now been superseded by V1.0 (AILuminate) released in Feb 2025, and which builds on feedback from v0.5 (see Framework #57 in our database).

⚠️Disclaimer: This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper and credit goes to Bertie Vidgen, Adarsh Agrawal, Ahmed M. Ahmed, and co-authors. For the full details, please refer to the original publication: https://arxiv.org/abs/2404.12241.

Further engagement

→ View all the frameworks included in the AI Risk Repository