This week we spotlight the twenty-fourth framework of risks from AI included in the AI Risk Repository: Gabriel, I., Manzini, A., Keeling, G., Hendricks, L. A., Rieser, V., Iqbal, H., Tomašev, N., Ktena, I., Kenton, Z., Rodriguez, M., El-Sayed, S., Brown, S., Akbulut, C., Trask, A., Hughes, E., Stevie Bergman, A., Shelby, R., Marchal, N., Griffin, C., … Manyika, J. (2024). The Ethics of Advanced AI Assistants. In arXiv. https://doi.org/10.48550/arXiv.2404.16244

Paper focus

This paper examines the ethical and societal risks posed by advanced AI assistants, which it defines as artificial agents with natural language interfaces that plan and execute sequences of actions on behalf of a user, across one or more domains, in line with the user’s expectations.

Included risk categories

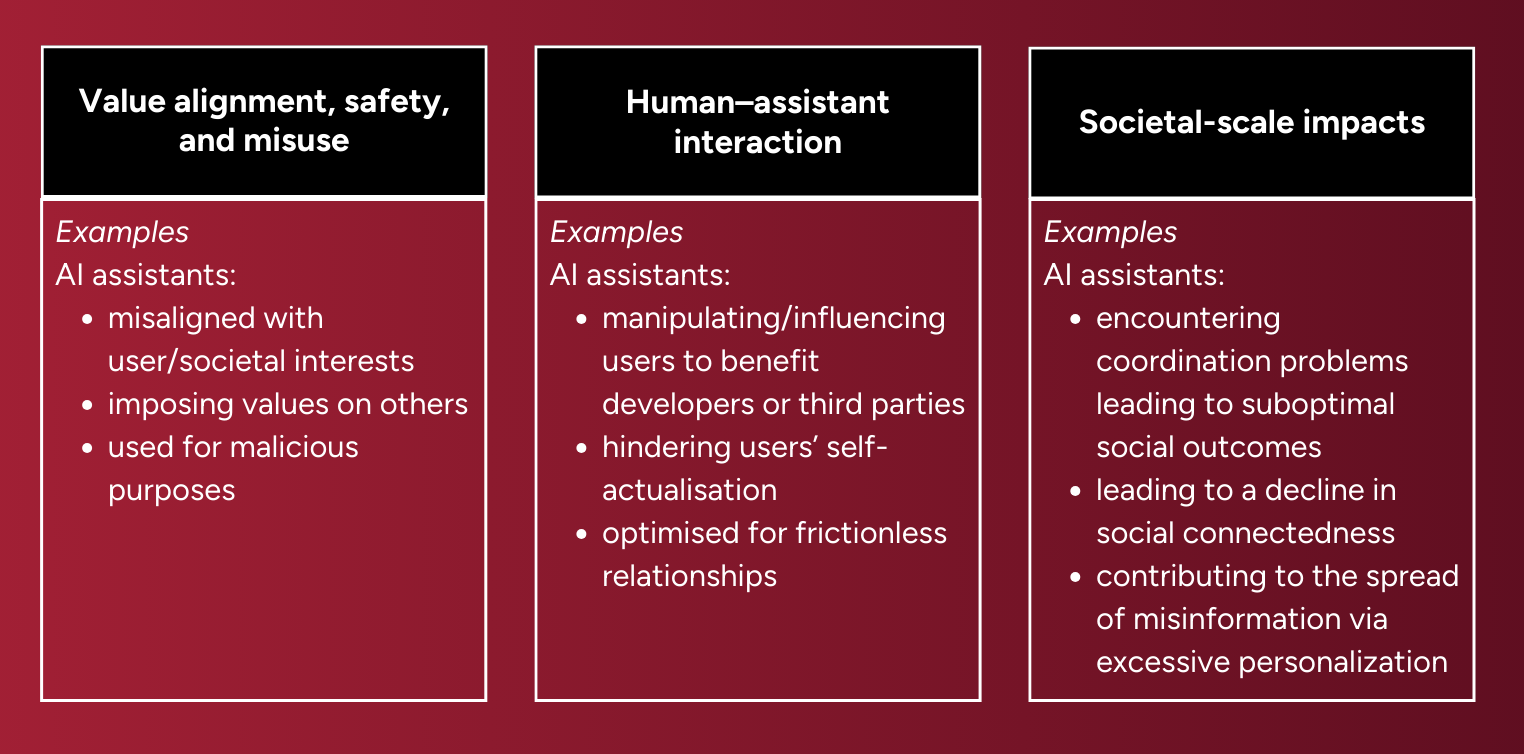

The paper surveys both the challenges and the opportunities presented by advanced AI assistants, organized into three main areas:

1. Value alignment, safety, and misuse: risks arising from the use of advanced AI assistants, involving topics such as value alignment, well-being, safety, and malicious use.

2. Human–assistant interaction: risks arising from the relationship between advanced AI assistants and users, involving topics such as influence, anthropomorphism, appropriate relationships, trust, and privacy.

3. Societal-scale impacts: risks associated with deploying advanced AI assistants across society, involving topics such as cooperation, misinformation, equity/access, economic impact, and the environment.

Key features of the framework and associated paper

⚠️ Disclaimer: This summary highlights a paper included in the MIT AI Risk Repository. We did not author the paper and credit goes to Iason Gabriel, Arianna Manzini, Geoff Keeling, and co-authors. For the full details, please refer to the original publication: https://arxiv.org/abs/2404.16244.

Further engagement

→ View all the frameworks included in the AI Risk Repository