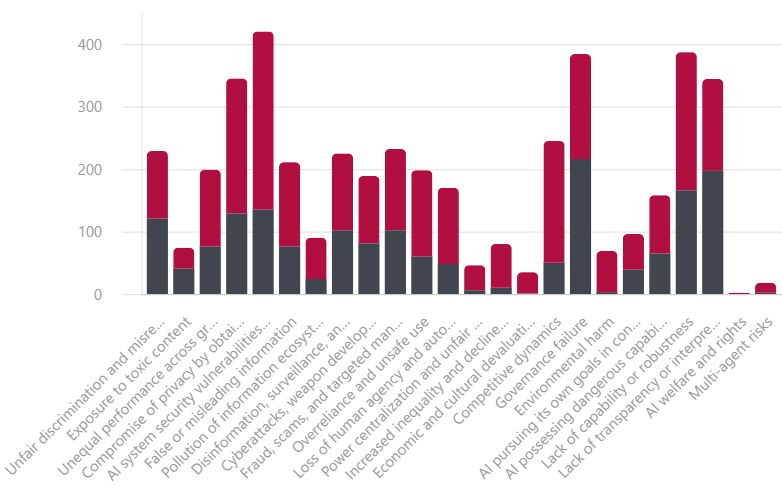

- A post-hoc framing of AI risks: Governance documents mention risks most frequently in the “Deploy” and “Operation and Monitor” stages rather than in the “Collect and Process Data” stage. This suggests that risk mitigation is addressed once the product is ready to launch rather than during the initial stages of conception.

- Risks that pertain to a broad set of AI systems map to all AI lifecycle stages, while societal risks and risks specific to frontier AI are underrepresented or map to a limited number of AI lifecycle stages. “2.2 AI system security vulnerability and attacks”, “7.3 Lack of Capability or robustness”, “6.5 Governance failure”, “2.1 Compromise of privacy” and “7.4 Lack of transparency or interpretability” are the five most recognized risks at all six stages, and most of them relate to model safety.

- With a stronger focus on socioeconomic regulation of AI systems in the “Collect and Process Data” stage of creating AI products, we can frontload the burden of mitigating model safety risks and make risk management a requirement of the innovation process.

- Risks are mentioned most frequently during the “Deploy” and “Operation and Monitor” stages.

- Risk discussion is noticeably less present in the “Collect and Process Data” stage.

- Conclusion: risk identification and diagnostics are considered after the product has been created rather than in the initial stages of conception.

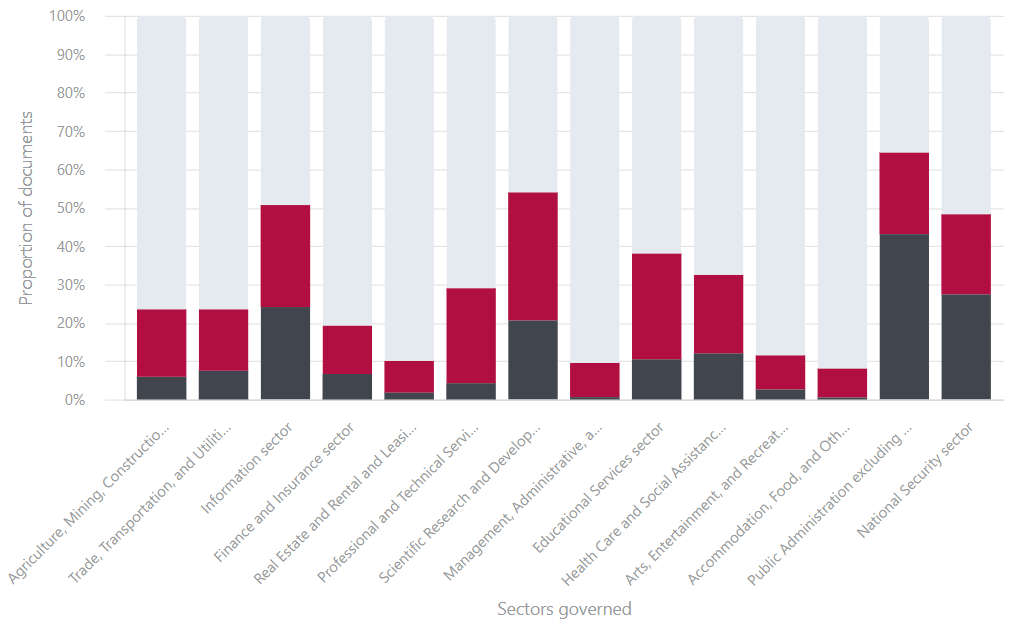

- Minimal Coverage Sectors:

  - Accommodation, Food, and Other Services

  - Arts, Entertainment, and Recreation

  - Real Estate and Rental and Leasing

  - Agriculture, Mining, Construction and Manufacturing
