AI Governance Map

Risk Domains

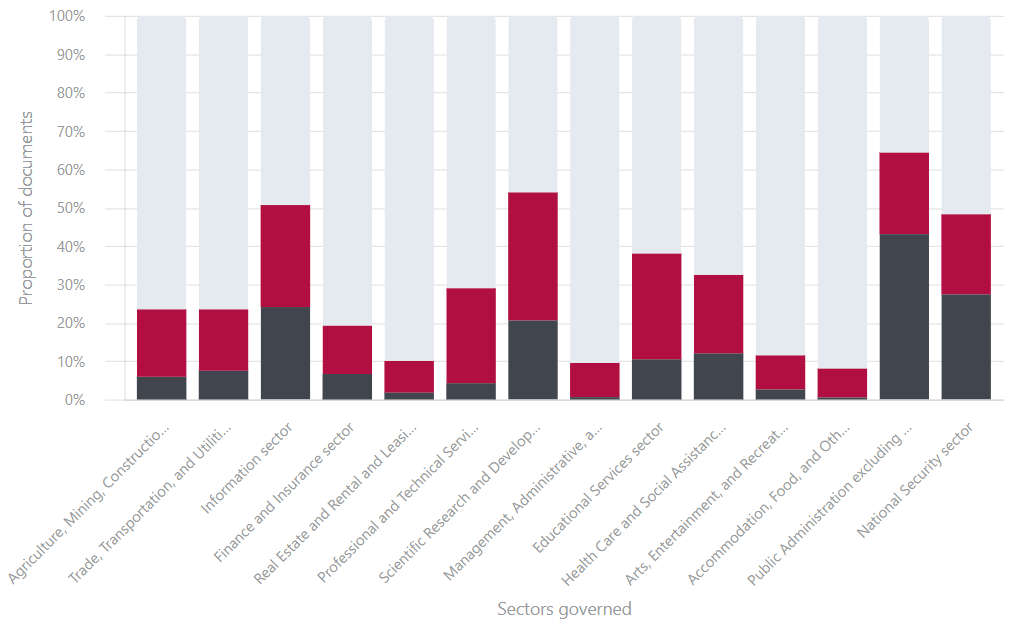

Insights

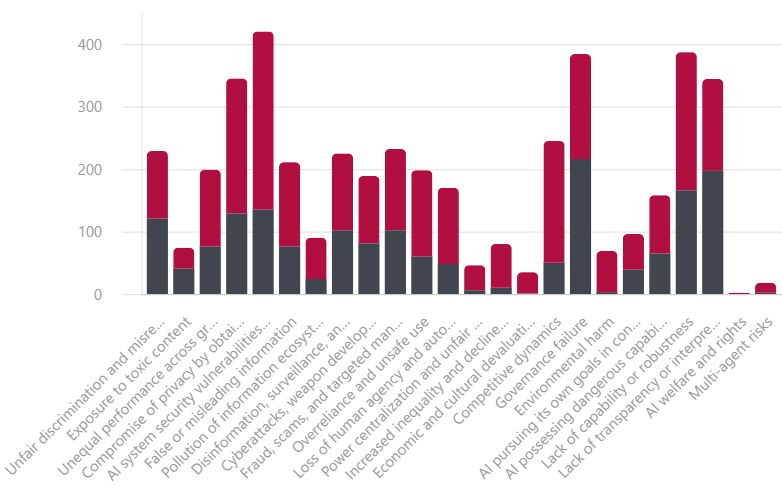

- The risk subdomains that are covered by the highest number of documents in the AGORA dataset are:

  - 2.2 (AI system security vulnerabilities and attacks)

  - 6.5 (Governance Failure)*

  - 7.3 (Lack of capability or robustness)

  - 2.1 (Compromise of Privacy)

  - 7.4 (Lack of transparency)

- The risk subdomains covered by the fewest documents in the AGORA dataset are:

  - 7.5 (AI Welfare and Rights)

  - 7.6 (Multi-agent risks)

  - 6.3 (Economic and Cultural Devaluation)

  - 6.1 (Power centralization)

  - 3.2 (Environmental Harm)

- Least-covered (minority) vs. most-covered (majority) subdomains in the AGORA dataset: Many minority subdomains concern socioeconomic risks, whereas the majority subdomains concern model safety. This emphasis suggests that legislators are more willing to dedicate attention and resources to model safety than to human-centered risks such as AI Welfare and Rights.

- Because AI governance is a relatively new topic for legislation and broad discussion, the low coverage of minority subdomains may reflect a general lack of attention to these subdomains so far, and should not be read as a firm conclusion about how they are represented in AI governance documents.

- *Note on LLM treatment of the “governance failure” subdomain: The LLM's understanding of “governance failure” is not accurate enough, so the finding that “governance failure” is among the most covered risks cannot be relied upon.
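
The rankings above come down to counting how many documents cover each risk subdomain. A minimal sketch of that tally follows, assuming each document has already been labelled with subdomain codes. The record layout, document IDs, and function names are hypothetical, and the toy labels are placeholders, not real AGORA data.

```python
from collections import Counter

# Hypothetical toy records: document ID -> risk subdomain codes it covers.
# Placeholder values for illustration only; not taken from the AGORA dataset.
doc_subdomains = {
    "doc_a": ["2.2", "7.3"],
    "doc_b": ["2.2", "2.1"],
    "doc_c": ["2.2", "7.5"],
}


def coverage_counts(records):
    """Count how many documents cover each subdomain.

    A document is counted at most once per subdomain, even if the
    same code appears several times in its label list.
    """
    counts = Counter()
    for codes in records.values():
        counts.update(set(codes))
    return counts


def rank_subdomains(records, n=5):
    """Return (most covered, least covered) lists of (code, count) pairs.

    Ties are broken by subdomain code so the ordering is deterministic.
    """
    counts = coverage_counts(records)
    most = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]
    least = sorted(counts.items(), key=lambda kv: (kv[1], kv[0]))[:n]
    return most, least


most_covered, least_covered = rank_subdomains(doc_subdomains, n=2)
print("Most covered:", most_covered)
print("Least covered:", least_covered)
```

A subdomain that no document covers never appears in these counts, so a real analysis would need to start from the full list of subdomain codes to report zero-coverage entries.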

Interactive

Explore how each risk subdomain is covered by the documents in the AGORA dataset. Different views present charts to show jurisdictional coverage, stages of the AI Lifecycle that the documents apply to, legislative status and more.
